Your AI DMs Aren’t “Privileged”? Read This Before You Chat

I remember the first time a friend told me he’d “talked to ChatGPT like a therapist.” He said it so casually, the way you might admit to late‑night journaling, that I almost forgot we were talking about a chat window run by a company, not a paper notebook tucked in a drawer. That’s the trick of modern tools: they feel personal. The tone is friendly, the replies come fast, and in minutes you can slide from a simple recipe question into the messiest corners of your life. A glowing screen can make even a confession feel safe.

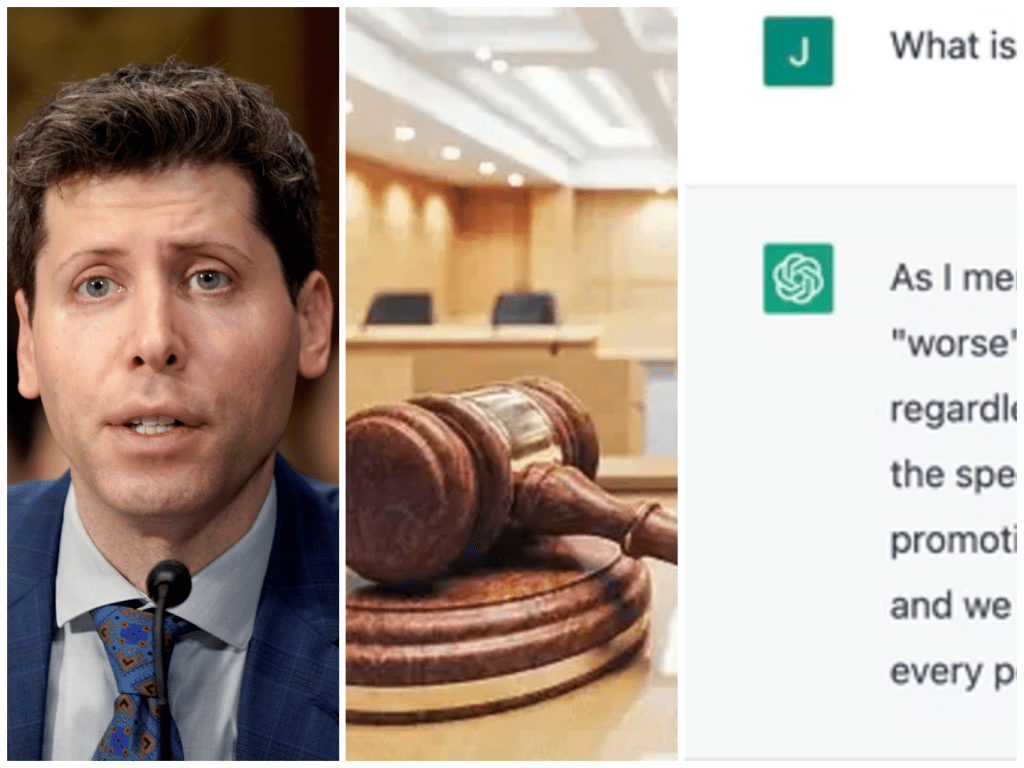

Then I read headlines quoting OpenAI CEO Sam Altman, who explained that conversations with ChatGPT are not legally confidential and could be used as evidence if a court compels their production. In plainer words: an AI chat isn’t the same as talking to a lawyer or a doctor, because those relationships are protected by special rules. There is no “AI‑client privilege.” If a judge signs the right papers and the data exists, your messages can be requested and produced. Hearing that from the person who runs the company jolted the conversation out of rumor and into reality. It isn’t meant to scare anyone; it’s meant to stop us from giving a tool the kind of trust we only give to a professional bound by law.

Once you see it that way, a lot of things click into place. We already know texts can be screenshotted and emails can be forwarded. We know cloud documents live on servers we don’t own. AI chats live there too. Even if you hit delete in your interface, service providers can keep copies for a time to prevent abuse, fix bugs, or comply with regulations. Some business and education plans let administrators tighten retention or turn it off for certain uses, which is helpful. But those settings don’t magically turn a chat into a confidential therapy session or a legally privileged conversation. They just limit how long the provider keeps the data and who inside an organization can access it.

What makes this tricky is how normal and helpful AI feels. You don’t need an appointment. You don’t have to explain yourself to a receptionist. You can ask the “dumb” questions without blushing, and you get something thoughtful back in seconds. It’s easy to start pouring your heart out to a blank box that always answers. But the “friend” you’re talking to is a service. The words you type are stored on someone else’s machines. And the law treats those words like any other stored electronic communication. If they are relevant to a lawsuit or investigation and the proper legal process is followed, those records can be requested.

I think about all the ways people quietly use AI already. Students ask for help drafting scholarship letters. Parents rewrite messages to ex‑spouses to keep the tone calm. Small business owners sketch contracts. People struggling with health anxiety ask what a strange symptom might mean. That last group is the one that sticks in my throat. When the stakes are high, advice isn’t enough; protection matters too. Doctors and therapists don’t just give answers. They give confidentiality anchored in law. A chatbot can give you a calm paragraph at 2 a.m., and sometimes that’s exactly what you need. But it can’t give you legal privilege.

None of this means AI has to be scary. It means we need the same common sense we learned with email and social media. If you wouldn’t write a secret on a postcard, don’t paste it into a consumer chatbot. Use AI freely for low‑stakes tasks and curiosity. Use business versions with clear data controls when your workplace depends on it. And for anything delicate (relationships, medical details, legal strategy), save the specifics for the people whose jobs include protecting your privacy by law.

There’s a broader, hopeful story here too. We’re still early. Rules evolve, and companies improve their products. It’s possible that lawmakers will eventually recognize a new kind of protected category for certain digital interactions, the way they once built privileges around therapy, medicine, and legal counsel. In the meantime, providers can help by making retention‑off modes easy to find, by writing privacy settings in plain English instead of as puzzle boxes, and by publishing regular transparency reports that show how often governments request user data and how those requests are handled. If these tools are going to become daily companions for sensitive topics, the companies behind them need to earn that trust with design, not just promises.

I keep thinking about the accidental intimacy of a good chat. A few lines in, the assistant starts to sound like a patient, well‑read friend who never gets tired. It’s okay to admit that this can be a comfort. It’s also okay to say out loud that comfort isn’t the same as confidentiality. We don’t need to break up with our tools to keep our boundaries. We just need to remember that the safest rooms are still the ones guarded by a door the law recognizes.

If you’ve already typed something tender into a chat box and now feel a little sick, take a breath. Not every message is stored forever, and not every stored message is at risk. If you have a real legal or medical concern, speak to a licensed professional in your area and ask about next steps. Going forward, make a tiny pause part of your routine. Before you press send, ask yourself a simple question: do I want these exact words saved anywhere that isn’t mine? If the answer is no, change the words, change the channel, or change the audience.

The new rules of talking to a bot

The biggest lesson I’ve learned is that speed and safety don’t always travel together. A chatbot is wonderful when you need a quick summary of an article, translations for your trip, a gentle tone for an email, or a brainstorm for a stuck paragraph. It’s an extraordinary study buddy and an efficient writing coach. It’s less wonderful at being a vault. If you’re dealing with contracts, medical histories, or painful personal details, you aren’t just looking for information. You’re looking for protection. That’s the moment to step away from the glow of the easy answer and into a conversation that carries privilege.

For teams, the fix is mostly policy and habit. Treat AI chat like email or cloud storage. Write down what should never be pasted into it—client secrets, unreleased financials, health data. Point people to enterprise accounts that have retention controls if they genuinely need AI for sensitive drafting. Train everyone to check the data settings before they paste. None of that is dramatic, but it’s how you turn “we meant well” into “we stayed safe.”

For individuals, it’s even simpler. Use AI with joy for the thousands of harmless things it does brilliantly. When the topic moves from ordinary to intimate, trade the chat window for a human who can promise confidentiality in writing. And when in doubt, rewrite your question so it loses the identifying details and keeps the general idea. You still get the help, and you keep the personal parts personal.

I don’t think we should let fear drain the excitement out of what these tools can do. The right prompt can save an hour. A gentle rewrite can save an argument. A good explanation can save a grade. But the boundary matters. Fast answers are wonderful. Trusted confidentiality is something different, and right now it lives in a different room. Sam Altman’s warning and the policies most providers publish are not meant to scold us. They’re signposts on a road we’re still learning to drive. Read them, believe them, and keep going, a little wiser than yesterday.

If I had to leave you with one picture, it would be this: think of an AI chat as a postcard moving through a very efficient, very modern mail system. Most postcards go exactly where you want. Most contain ordinary news, and that’s fine. But if the message could change your life in a courtroom, don’t write it on a postcard. Save it for the envelope the law already knows how to seal. That small difference—between a convenient tool and a protected conversation—can spare you a lot of trouble later, without taking away the magic of having a helpful voice whenever you need one.